Artificial intelligence (AI) refers to computer systems or machines capable of performing tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, and language translation.

AI has seen rapid advancements and adoption across various industries in recent years, leading many experts to believe it will profoundly impact human life in the coming decades.

A Brief History of AI

The concept of intelligent machines has fascinated humans for centuries. But AI as a proper field of computer science can be traced back to the 1950s, when scientists and mathematicians started exploring the possibility of creating intelligent machines.

In 1950, British mathematician Alan Turing published a landmark paper titled “Computing Machinery and Intelligence,” which proposed the Turing Test to determine if a machine can exhibit intelligent behavior equivalent to or indistinguishable from a human’s. This thought experiment laid the foundations for artificial intelligence research.

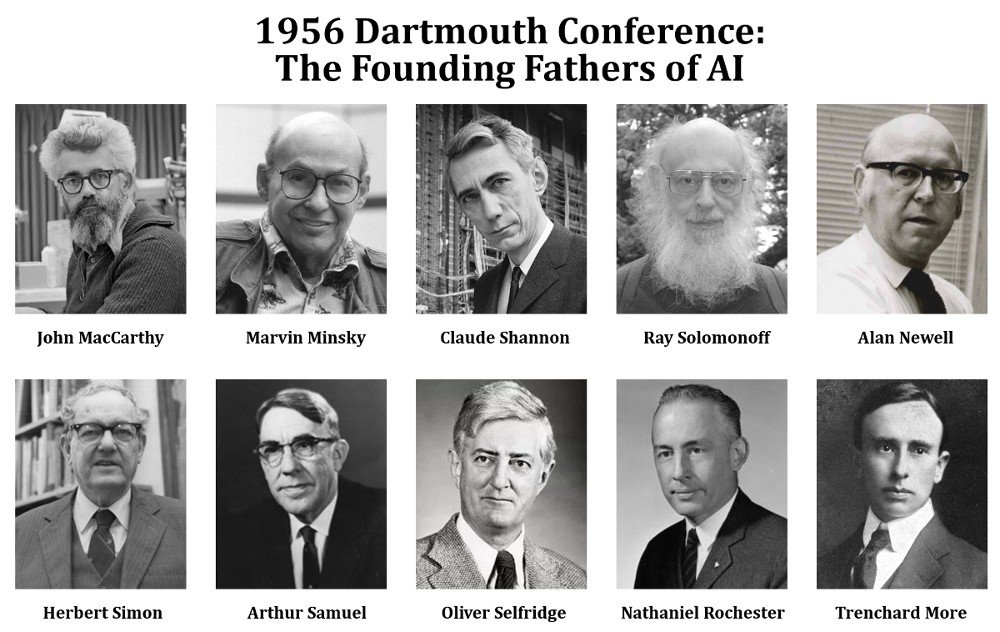

The term “artificial intelligence” was coined by John McCarthy in a 1955 proposal for what became the 1956 Dartmouth Conference, where he and other leading scientists, including Marvin Minsky, Claude Shannon, and Nathaniel Rochester, convened to discuss the feasibility of machine intelligence.

In the following decades, AI research experienced ups and downs, including periods known as “AI winters,” when funding and interest in AI dried up. But in the 21st century, AI regained prominence thanks to increased computational power, the availability of large datasets, and algorithmic breakthroughs.

What is artificial intelligence?

Artificial intelligence refers to the ability of computer systems or machines to exhibit human-like intelligence and perform tasks like sensing, comprehending, acting, and learning.

It is a broad field that encompasses:

- Machine learning – The ability of AI systems to learn from data without being explicitly programmed. Instead of hardcoded software rules, machine learning uses algorithms to analyze data, learn from it, and make predictions.

- Computer vision – The ability to process, analyze, and understand digital images and videos. It allows AI systems to “see” and comprehend visual data.

- Natural language processing – The ability to understand, interpret, and generate human language, including speech recognition, translation, and text analytics.

- Robotics – Development of intelligent physical agents that can move and interact with the environment, complementing their computational capabilities with physical bodies.

- Expert systems – Programmed knowledge and rules that mimic the decision-making ability of a human expert. They are widely used for applications like medical diagnosis.

- Speech recognition – Enables AI systems to process and respond to human language commands spoken into microphones or other devices.

- Advanced analytics – Sophisticated data analysis techniques to find patterns, derive insights, guide decision-making, and make predictions.
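
To make the contrast between hand-written rules and learned behavior concrete, here is a minimal sketch of the expert-systems idea from the list above. The rules and fact names are invented purely for illustration (a toy hardware troubleshooting scenario), and real expert systems are far larger and usually built with dedicated tooling.

```python
# Toy forward-chaining rule engine.
# RULES and every fact name below are hypothetical examples, not taken
# from any real expert system.
RULES = [
    # (facts that must all be known, fact to conclude)
    ({"no_power_light"}, "check_power_cable"),
    ({"power_light_on", "no_display"}, "check_monitor_cable"),
    ({"check_monitor_cable", "cable_ok"}, "suspect_graphics_card"),
]


def infer(facts):
    """Apply the rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    print(sorted(infer({"power_light_on", "no_display", "cable_ok"})))
```

Every conclusion here traces back to a rule a person wrote down, which is what separates this approach from machine learning, where the behavior is derived from data.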

Applications of AI

The unique capabilities of AI algorithms have enabled their application across various industries and use cases. Here are some major applications of AI:

1. Healthcare

- Diagnosis of diseases by analyzing medical images, patient data, and medical records

- Personalized medicine and treatment recommendations

- Drug discovery and development of new pharmaceuticals

- Virtual nursing assistants, chatbots, and other tools to improve patient care

2. Transportation

- Autonomous vehicles like self-driving cars

- Intelligent traffic management systems

- Smart public transportation and ride-hailing services

- Logistics optimization for supply chain and delivery

3. Banking and Finance

- Fraud detection for credit cards and insurance

- Algorithmic trading and stock market prediction

- Personalized banking through virtual assistants and chatbots

- Credit assessment and loan underwriting

4. Cybersecurity

- Malware, intrusion, and anomaly detection

- Continuous authentication and risk-based adaptive access control

- Automated threat intelligence, behavior analytics, and forensics

5. Retail and eCommerce

- Product recommendations and personalized shopping

- Supply chain and inventory optimization

- Predictive analytics for targeted marketing and advertising

- Intelligent customer service chatbots

6. Defense

- Surveillance, threat detection, and intelligence gathering

- Data fusion from multiple sensors

- Simulation, testing, and war gaming

- Computer vision for guidance systems and weapons targeting

7. Media and Entertainment

- Video game AI for realistic NPCs, bots, and interactive narratives

- Recommender systems for video and music streaming services

- Natural language generation for news stories and sports reporting

- Deepfakes and AI-generated art, audio, video, and text content

8. Business Operations

- Predictive analytics for forecasting, budgeting, and risk modeling

- Intelligent process automation with robotic process automation (RPA)

- HR analytics for talent acquisition and retention

- Smart business applications like virtual assistants, chatbots, and voice-enabled tools

Techniques and Algorithms Used in AI

Many different techniques and algorithms are used to build and train AI systems. Here are some of the most common and essential techniques:

1. Machine Learning

Machine learning gives computers the ability to learn without explicit programming. Algorithms analyze data, identify patterns, and learn from these observations.

The main types of machine learning include:

- Supervised learning – Algorithms are trained using labeled datasets where the desired output is already known. Examples include classification and regression.

- Unsupervised learning – Algorithms are given unlabeled data and asked to find hidden patterns and groupings without human guidance. Clustering is a common unsupervised technique.

- Reinforcement learning – Algorithms interact dynamically with an environment, learning through trial and error which actions yield the highest reward. It is used to master games and control systems.
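
As a small illustration of the supervised case above, the sketch below fits a straight line to labeled examples (regression) using ordinary least squares. The function names `fit_line` and `predict` and the sample data are assumptions made for this example; practical projects would normally rely on an established library.

```python
def fit_line(xs, ys):
    """Learn slope and intercept from labeled pairs (x, y) by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized,
    # since the normalization cancels in the ratio).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to an input that was not in the training data."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Made-up training data in which the known output is always 2 * x.
    model = fit_line([1, 2, 3, 4], [2, 4, 6, 8])
    print(model)
    print(predict(model, 5))
```

The rule "multiply by two" is never written into the program. It is recovered from the labeled examples, which is the sense in which the system learns without being explicitly programmed.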

2. Deep Learning

Deep learning is a subset of machine learning based on artificial neural networks, which are loosely inspired by the human brain. It stacks multiple layers of artificial neurons to extract progressively higher-level features from raw input data.

Common deep learning architectures include convolutional neural networks (CNNs) and recurrent neural networks (RNNs).
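
To show what "multiple layers" means in practice, here is a minimal sketch of a forward pass through a two-layer network that computes XOR, a function a single linear layer cannot represent. The weights are hand-picked for illustration rather than learned, and the names `dense` and `xor_net` are assumptions of this example.

```python
def relu(value):
    """Rectified linear unit: passes positive values, clips negatives to 0."""
    return max(0.0, value)


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: a weighted sum per neuron, then activation."""
    outputs = []
    for weight_row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(weight_row, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


# Hand-picked weights (illustrative only; a real network learns these).
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def xor_net(x1, x2):
    """Hidden layer builds intermediate features; output layer combines them."""
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_net(a, b))
```

The hidden layer turns the raw inputs into two intermediate features ("at least one input is on" and "both inputs are on"), and the output layer combines them. Training a real network amounts to finding such weights automatically from data.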

3. Computer Vision

Computer vision applies machine learning to process and analyze visual data using techniques like:

- Object classification – Labeling and categorizing objects in images or videos

- Object detection – Locating instances of objects within the data

- Image segmentation – Partitioning visual data into distinct regions or categories

- Image generation – Creating and synthesizing realistic images and videos
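
Many systems for the tasks above are built on convolutional neural networks, whose basic operation is sliding a small filter over an image. The sketch below applies a hand-written filter to a tiny grayscale image to highlight a vertical edge. The function name `convolve2d`, the image, and the kernel are assumptions for this example; like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    result = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            # Weighted sum of the image patch under the kernel.
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        result.append(out_row)
    return result


if __name__ == "__main__":
    # 4x4 image: dark (0) on the left half, bright (1) on the right half.
    image = [[0, 0, 1, 1] for _ in range(4)]
    # Horizontal difference filter: responds where brightness increases.
    kernel = [[-1, 1]]
    for out_row in convolve2d(image, kernel):
        print(out_row)
```

The output is nonzero only in the column where dark meets bright. In a trained network the filter values are learned rather than hand-written, and many such filters are stacked in layers.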

4. Natural Language Processing

NLP algorithms allow computers to understand, interpret, and generate human language. Key capabilities include:

- Text analysis – Extracting semantics, sentiments, topics, and entities from text

- Speech recognition – Transcribing spoken audio into text

- Language translation – Automating translation between languages

- Dialogue systems – Managing conversations through chatbots and voice assistants
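
As a rough sketch of the text-analysis capability above, the example below tokenizes text, counts words, and computes a crude sentiment score from a word list. The word lists and function names are invented for illustration; modern NLP systems learn such associations from data instead of using fixed lists.

```python
import re
from collections import Counter

# Tiny hand-made lexicons (hypothetical; real sentiment models are learned).
POSITIVE_WORDS = {"good", "great", "excellent", "love"}
NEGATIVE_WORDS = {"bad", "poor", "terrible", "hate"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def word_counts(text):
    """Count how often each token occurs."""
    return Counter(tokenize(text))


def sentiment_score(text):
    """Positive-word count minus negative-word count."""
    score = 0
    for token in tokenize(text):
        if token in POSITIVE_WORDS:
            score += 1
        elif token in NEGATIVE_WORDS:
            score -= 1
    return score


if __name__ == "__main__":
    review = "Great phone, terrible battery, but I love the screen!"
    print(tokenize(review))
    print(sentiment_score(review))
```

A fixed lexicon like this misses negation, sarcasm, and context ("not good" scores as positive), which is part of why practical systems rely on machine learning.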

5. Planning and Optimization

These techniques automate decision-making, allowing AI systems to plan sequences of actions and optimize an objective. Common methods include linear programming, gradient descent, and genetic algorithms.
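
Of the methods mentioned above, gradient descent is the easiest to show in a few lines. The sketch below minimizes a simple one-variable function by repeatedly stepping against its gradient. The function name `gradient_descent`, the learning rate, the step count, and the objective are all assumptions chosen for this example.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly move x a small step in the direction that lowers f."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x


def objective_gradient(x):
    """Gradient of the example objective f(x) = (x - 3)^2."""
    return 2 * (x - 3)


if __name__ == "__main__":
    # Starting from 0, the iterates approach the minimum at x = 3.
    print(gradient_descent(objective_gradient, start=0.0))
```

The same idea, applied to millions of parameters at once, is how most neural networks are trained. The learning rate matters: too small and progress is slow, too large and the iterates can overshoot and diverge.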

The Promise and Potential Risks of AI

AI has enormous potential to transform our lives in the coming years. However, researchers also warn about its risks and limitations.

1. The Promise of AI

- Automating mundane and repetitive tasks to increase productivity

- Personalizing services like healthcare, education, and retail to individual needs

- Democratizing access to services like translation for people across language barriers

- Enhancing human capabilities through human-AI collaboration rather than pure automation

- Accelerating scientific discovery by analyzing massive amounts of data

- Improving transportation, logistics, and infrastructure management

- Increasing accessibility for people with disabilities through AI assistants and interfaces

2. The Potential Risks of AI

- Job losses due to increased automation and redundancy of human roles

- Exacerbating existing biases and discrimination present in data used to train AI models

- Lack of transparency in AI decision-making, which can lead to harmful errors and unforeseen consequences

- Cybersecurity vulnerabilities that threat actors could exploit

- Loss of privacy through expanded surveillance and predictive analytics

- Technological unemployment if job creation lags behind destruction

- Existential risk from advanced AI systems becoming uncontrollable superintelligences

The Future of AI

Here are some exciting frontiers being explored in AI research that can shape its future:

- Hybrid AI systems that combine multiple techniques like machine learning and knowledge graphs to overcome the limitations of each.

- Specialized AI that focuses on narrow tasks versus artificial general intelligence (AGI).

- More human-like reasoning and common sense through deep learning, reinforcement learning, and natural language processing.

- Fairness, transparency, and explainability in AI to build trust and prevent harm.

- On-device AI that runs efficiently on phones and edge devices without relying on the cloud.

- Multi-modal AI that leverages multiple inputs like text, speech, and vision to provide enriched experiences.

- Further advancement in robotics through embodied AI agents and brain-computer interfaces.

- Generative AI models that can create original content like images, videos, and text.

Though AI has achieved impressive results, many researchers believe human-level AI, or AGI, is still far off. But in the coming decade, AI is likely to transform major industries, enhance human capabilities, and raise serious risks if not developed responsibly. The path ahead for AI will be defined by leveraging its strengths while mitigating its dangers.

Frequently Asked Questions on Artificial Intelligence

Here are some common questions people have about artificial intelligence and its impact:

What are the risks associated with artificial intelligence?

Some significant risks associated with AI include job automation leading to widespread unemployment, biases in data and algorithms causing discrimination, loss of privacy, lethal autonomous weapons, and the existential risk of superintelligence. However, researchers are working on techniques to build safe and ethical AI.

Will AI overtake human intelligence?

AI has exceeded human capabilities in specific narrow tasks, but many researchers believe human-level artificial general intelligence (AGI), let alone “superintelligence,” is still decades away. The path to AGI remains uncertain.

Is artificial intelligence good or bad?

Like any technology, AI itself is neither good nor bad. Its impact depends on how it is developed and used. AI has massive potential for good but poses risks if not handled carefully. We need to direct AI for beneficial purposes while addressing its dangers proactively.

How is artificial intelligence used in the real world?

AI is used in real-world applications across healthcare, transportation, finance, defense, retail, entertainment, cybersecurity, and farming. Its ability to analyze data, automate tasks, and make predictions is transforming major industries.

Will AI replace human jobs?

AI will automate some routine and repetitive jobs. However, researchers believe it will also create new types of jobs. The net impact on employment remains uncertain: AI may displace certain roles without causing mass unemployment, provided job creation keeps pace with disruption. We need policies to manage this transition.